
NVIDIA Triton Inference Server

What is an Inference Server?

The role of an inference server is to accept input data from users, pass it to an underlying trained model in the format the model requires, and return the results. It is also widely known as a prediction server, since those results are, in most cases, predictions.
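To make the idea concrete, here is a minimal sketch of what any inference server does, written with only Python's standard library. It is not Triton and not how Triton is implemented: the "model" is a hypothetical fixed linear function, and the `{"instances": [...]}` request format and port 8080 are assumptions chosen for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: a fixed linear function.
# A real server would load trained weights from disk instead.
WEIGHTS = [0.5, -1.0, 2.0]
BIAS = 0.1


def model(features):
    """Pretend 'trained model': expects exactly len(WEIGHTS) floats."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def predict(payload):
    """Accept user input, convert it to the model's format, return predictions."""
    rows = payload["instances"]  # assumed request schema: {"instances": [[...], ...]}
    batch = []
    for row in rows:
        features = [float(v) for v in row]  # coerce user input to floats
        if len(features) != len(WEIGHTS):
            raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
        batch.append(features)
    return {"predictions": [model(f) for f in batch]}


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.loads(self.rfile.read(length))
            result, status = predict(body), 200
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, missing keys, or bad feature values -> client error.
            result, status = {"error": str(exc)}, 400
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # Serve on localhost:8080 (an arbitrary port for this sketch).
    HTTPServer(("127.0.0.1", 8080), PredictionHandler).serve_forever()
```

Triton plays the same accept, convert, and return role, but for many model frameworks at once and with GPU-aware scheduling, which is why it is worth using instead of a hand-rolled server like this one.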

NVIDIA Triton Inference Server is widely regarded as a gold standard for deploying and running AI models, because it standardizes how models are served across many kinds of workloads. Whether you deploy custom or off-the-shelf models, it is worth understanding how it works internally.

The steps to install Triton Server are described in detail on this Triton GitHub page.

There are a few important things to know about Triton Inference Server:

- NVIDIA recommends running Triton as a Docker container, which also makes it easy to run multiple instances with Kubernetes.

- You need to install the NVIDIA Container Toolkit so that Docker containers can access the GPU, whether that is a server-class GPU such as the A100 or a consumer card.

- You can load new ML models on the fly, but NVIDIA does not recommend it: allowing dynamic updates to the model repository can lead to arbitrary code execution. The safer approach is to restart Triton each time, passing the folder that contains the newly added models as a command-line argument (`--model-repository`).

- Triton exposes a Prometheus metrics endpoint with GPU and CPU utilization, memory usage, and more. Prometheus can scrape this data so that Grafana can display it in custom dashboards, and you can customize which metrics are reported.
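Since the recommended workflow is to restart Triton with the model folder passed on the command line, it can help to sanity-check that folder before restarting. The sketch below assumes Triton's documented repository layout, `<model_name>/<numeric_version>/<model files>` with an optional `<model_name>/config.pbtxt`; `check_model_repository` is a hypothetical helper for illustration, not part of Triton.

```python
import sys
from pathlib import Path


def check_model_repository(repo):
    """Hypothetical pre-restart check of a Triton-style model repository.

    Assumes the layout <repo>/<model_name>/<numeric_version>/<model files>,
    plus an optional <repo>/<model_name>/config.pbtxt.
    Returns {model_name: [problems]}; an empty list means the model looks OK.
    """
    root = Path(repo)
    if not root.is_dir():
        raise FileNotFoundError(f"model repository not found: {root}")
    report = {}
    for model_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        problems = []
        # Version directories must be named with digits only (1/, 2/, ...).
        versions = sorted(
            (p for p in model_dir.iterdir() if p.is_dir() and p.name.isdigit()),
            key=lambda p: int(p.name),
        )
        if not versions:
            problems.append("no numeric version directory (e.g. 1/)")
        for version in versions:
            if not any(version.iterdir()):
                problems.append(f"version {version.name} is empty")
        if not (model_dir / "config.pbtxt").is_file():
            # Some backends can generate the config automatically, so only warn.
            problems.append("warning: no config.pbtxt")
        report[model_dir.name] = problems
    return report


if __name__ == "__main__":
    repo_path = sys.argv[1] if len(sys.argv) > 1 else "./model_repository"
    for name, problems in check_model_repository(repo_path).items():
        print(name, "OK" if not problems else "; ".join(problems))
```

Running a check like this first means a restart is less likely to come up with a model silently missing because of a misplaced file.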
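To peek at the raw data that Prometheus would scrape, the sketch below fetches and parses the metrics endpoint. It assumes Triton's default metrics port 8002 and the `/metrics` path; metric names such as `nv_gpu_utilization` depend on the Triton version, so treat them as examples. In a real setup Prometheus scrapes the endpoint and Grafana queries Prometheus, so this simplified parser is only meant for quick inspection.

```python
import re
import urllib.request

# Assumption: Triton's metrics endpoint on its default port; adjust host/port as needed.
METRICS_URL = "http://localhost:8002/metrics"
# Matches label pairs like gpu_uuid="GPU-..." (escaped quotes are left as-is).
_LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_prometheus_text(text):
    """Simplified parser for the Prometheus text exposition format.

    Returns a list of (metric_name, labels_dict, value) tuples. Comment lines
    (# HELP / # TYPE) and optional trailing timestamps are ignored.
    """
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "{" in line:
            name, rest = line.split("{", 1)
            label_part, value_part = rest.rsplit("}", 1)
            labels = dict(_LABEL_RE.findall(label_part))
        else:
            name, value_part = line.split(None, 1)
            labels = {}
        value = float(value_part.split()[0])  # drop an optional timestamp
        samples.append((name.strip(), labels, value))
    return samples


def fetch_metrics(url=METRICS_URL, timeout=5.0):
    """Download and parse the current metrics from a running server."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_prometheus_text(resp.read().decode("utf-8"))


if __name__ == "__main__":
    for name, labels, value in fetch_metrics():
        if name.startswith("nv_gpu_"):  # e.g. GPU utilization and memory metrics
            print(name, labels, value)
```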


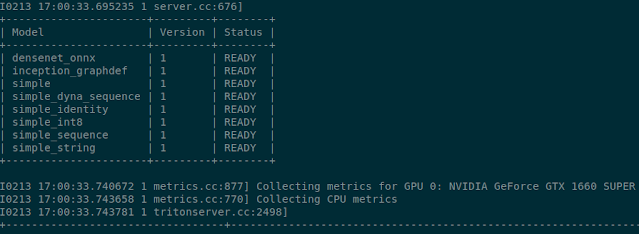

NVIDIA Triton Server running on a commodity NVIDIA GeForce GTX 1660 Super

As the screenshot above shows, I successfully deployed the models to NVIDIA Triton Server on my Ubuntu desktop, following the steps described on the Triton GitHub page.
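Once models are deployed, a quick way to exercise one is an HTTP request using the KServe v2 inference protocol that Triton implements. In this sketch the default HTTP port 8000, the model name `simple`, and its tensor names (`INPUT0`, `INPUT1`), shapes, and `INT32` datatype are assumptions; replace them with the values from your own model's `config.pbtxt`.

```python
import json
import urllib.request

# Assumptions: Triton's HTTP endpoint on its default port, and a model named
# "simple" with two INT32 inputs of shape [1, 16]. Adjust to your config.pbtxt.
BASE_URL = "http://localhost:8000"


def build_infer_request(inputs):
    """Build a v2-protocol JSON body from {tensor_name: (shape, datatype, flat_data)}."""
    body = {"inputs": []}
    for name, (shape, datatype, data) in inputs.items():
        expected = 1
        for dim in shape:
            expected *= dim
        if len(data) != expected:
            raise ValueError(f"{name}: shape {shape} needs {expected} values, got {len(data)}")
        body["inputs"].append(
            {"name": name, "shape": list(shape), "datatype": datatype, "data": list(data)}
        )
    return body


def server_ready(base_url=BASE_URL, timeout=5.0):
    """Return True if GET /v2/health/ready answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/v2/health/ready", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # connection refused, timeout, or non-2xx (HTTPError)
        return False


def infer(model_name, inputs, base_url=BASE_URL, timeout=10.0):
    """POST an inference request and return {output_name: flat_data}."""
    req = urllib.request.Request(
        f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(build_infer_request(inputs)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        result = json.load(resp)
    return {out["name"]: out["data"] for out in result["outputs"]}


if __name__ == "__main__":
    if not server_ready():
        raise SystemExit("Triton is not ready at " + BASE_URL)
    a = list(range(16))
    b = [1] * 16
    outputs = infer("simple", {"INPUT0": ([1, 16], "INT32", a), "INPUT1": ([1, 16], "INT32", b)})
    print(outputs)
```

NVIDIA also publishes Python client libraries (`tritonclient`) that wrap this protocol; the plain `urllib` version here simply makes the request and response format visible.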
